Many users want to run large language models (LLMs) locally for more privacy and control, and without subscriptions, but until recently this meant a trade-off in output quality. Newly released open-weight models, like OpenAI’s gpt-oss and Alibaba’s Qwen 3, can run directly on PCs, delivering useful, high-quality outputs, especially for local agentic AI.

This opens up new opportunities for students, hobbyists and developers to explore generative AI applications locally. NVIDIA RTX PCs accelerate these experiences, delivering fast and snappy AI to users.

Getting Started With Local LLMs Optimized for RTX PCs

NVIDIA has worked to optimize top LLM applications for RTX PCs, extracting maximum performance from the Tensor Cores in RTX GPUs.

One of the easiest ways to get started with AI on a PC is with Ollama, an open-source tool that provides a simple interface for running and interacting with LLMs. It supports dragging and dropping PDFs into prompts, conversational chat and multimodal understanding workflows that include text and images.

NVIDIA has collaborated with Ollama to improve its performance and user experience. The latest developments include:

- Performance improvements on GeForce RTX GPUs for OpenAI’s gpt-oss-20B model and Google’s Gemma 3 models

- Support for the new Gemma 3 270M and EmbeddingGemma3 models for hyper-efficient retrieval-augmented generation on the RTX AI PC

- Improved model scheduling system to maximize and accurately report memory utilization

- Stability and multi-GPU improvements
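To make the retrieval-augmented generation item above more concrete, here is a minimal sketch of the retrieval step: documents and the query are represented as embedding vectors, and the closest documents are handed to the LLM as context. The three-dimensional vectors and document names are made up for illustration; in practice an embedding model would produce much longer vectors, and the helper names `cosine_similarity` and `top_k` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return the names of the k documents most similar to the query."""
    ranked = sorted(
        doc_vecs,
        key=lambda name: cosine_similarity(query_vec, doc_vecs[name]),
        reverse=True,
    )
    return ranked[:k]


# Toy "embeddings" (assumed values, not output of a real embedding model).
DOCS = {
    "physics_notes": [0.9, 0.1, 0.0],
    "chem_textbook": [0.1, 0.9, 0.1],
    "syllabus": [0.3, 0.3, 0.8],
}
QUERY = [0.8, 0.2, 0.1]

if __name__ == "__main__":
    # The best matches would be added to the LLM prompt as context.
    print(top_k(QUERY, DOCS))
```

A small embedding model keeps this step cheap, which is why it pairs well with a larger chat model running on the same GPU.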

Ollama is a developer framework that can be used with other applications. For example, AnythingLLM, an open-source app that lets users build their own AI assistants powered by any LLM, can run on top of Ollama and benefit from all of its accelerations.

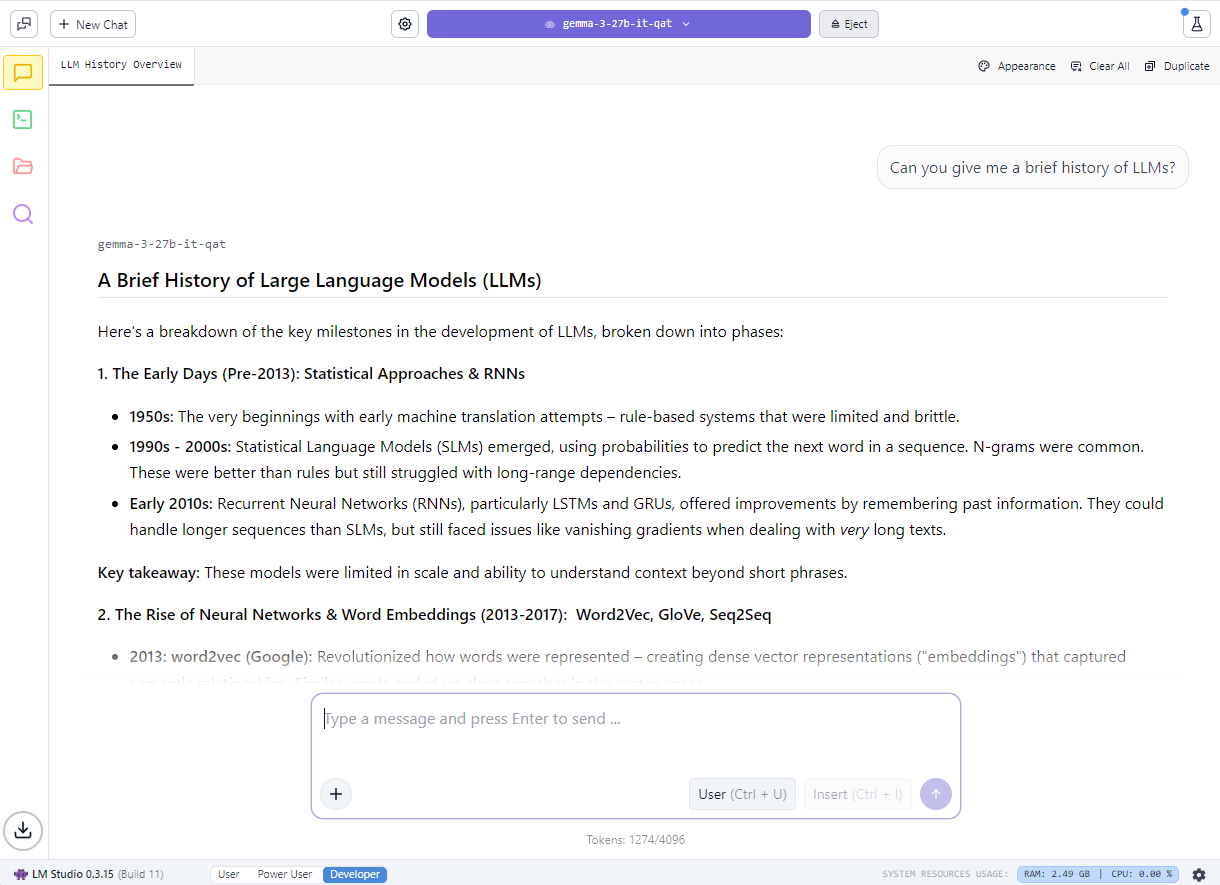

Enthusiasts can also get started with local LLMs using LM Studio, an app powered by the popular llama.cpp framework. The app provides a user-friendly interface for running models locally, letting users load different LLMs, chat with them in real time and even serve them as local application programming interface (API) endpoints for integration into custom projects.
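As a rough illustration of what integrating a local endpoint into a custom project can look like, the sketch below builds a chat request using only the Python standard library. It assumes the local server speaks the OpenAI-style chat completions format and listens on `http://localhost:1234/v1`; both the address and the placeholder model name `local-model` are assumptions to check against the app's server settings, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumed address of the local server; adjust to match your setup.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,  # placeholder name, an assumption
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response dict."""
    return response_body["choices"][0]["message"]["content"]


def ask(prompt, **kwargs):
    """Send the request; this only works while a local server is running."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(json.load(response))
```

With a model loaded and the server started, `ask("Summarize my lab notes in three bullets.")` would return the model's reply as a string. Nothing leaves the machine, since the request goes to localhost.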

NVIDIA has worked with llama.cpp to optimize performance on NVIDIA RTX GPUs. The latest updates include:

- Support for the latest NVIDIA Nemotron Nano v2 9B model, which is based on the novel hybrid-mamba architecture

- Flash Attention now turned on by default, offering up to a 20% performance improvement compared with running with Flash Attention turned off

- CUDA kernel optimizations for RMS Norm and fast-div based modulo, resulting in up to 9% performance improvements for popular models

- Semantic versioning, making it easy for developers to adopt future releases
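For readers unfamiliar with the two kernel-level operations named above, the sketch below shows what each one computes, in plain Python rather than CUDA. RMS Norm rescales a vector by the reciprocal of its root mean square. The fast-div based modulo replaces a hardware division by a fixed divisor with a precomputed multiply and shift, a standard technique for unsigned 32-bit integers. This is an illustration of the math only, and the function names are hypothetical; the actual llama.cpp kernels may be organized differently.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """Scale x by 1 / sqrt(mean(x^2) + eps), then by a per-element weight."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * w for v, w in zip(x, weight)]


def init_fastdiv(d):
    """Precompute (multiplier, shift) for a fixed divisor 1 <= d < 2**32."""
    shift = (d - 1).bit_length()  # ceil(log2(d))
    mult = ((1 << 32) * ((1 << shift) - d)) // d + 1
    return mult, shift


def fastdiv(n, mult, shift):
    """Compute n // d for 0 <= n < 2**32 without a division."""
    hi = (n * mult) >> 32  # high 32 bits of the 64-bit product
    return (hi + n) >> shift


def fastmodulo(n, d, mult, shift):
    """Compute n % d from the fast quotient: n - (n // d) * d."""
    return n - fastdiv(n, mult, shift) * d


if __name__ == "__main__":
    mult, shift = init_fastdiv(7)
    print(fastmodulo(100, 7, mult, shift))
    print(rms_norm([3.0, 4.0], [1.0, 1.0]))
```

The precomputation in `init_fastdiv` happens once per divisor, which suits GPU kernels where the same tensor dimension is used as a divisor by every thread when converting flat indices into coordinates.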

Learn more about gpt-oss on RTX and how NVIDIA has worked with LM Studio to accelerate LLM performance on RTX PCs.

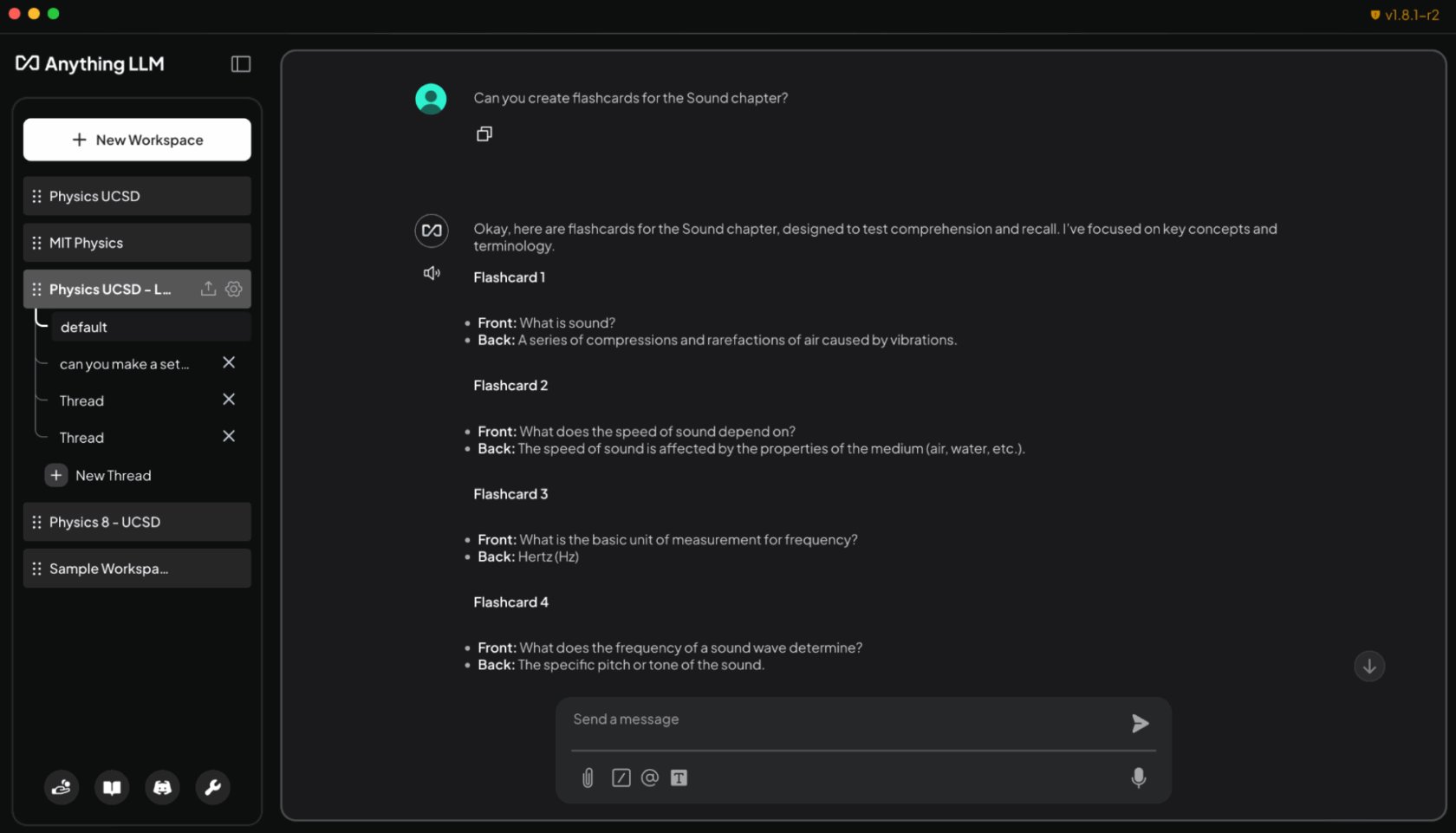

Creating an AI-Powered Study Buddy With AnythingLLM

In addition to greater privacy and performance, running LLMs locally removes restrictions on how many files can be loaded or how long they stay available, enabling context-aware AI conversations over a longer period of time. This creates more flexibility for building conversational and generative AI-powered assistants.

For students, managing a flood of slides, notes, labs and past exams can be overwhelming. Local LLMs make it possible to create a personal tutor that can adapt to individual learning needs.

The demo below shows how students can use local LLMs to build a generative AI-powered assistant:

A simple way to do this is with AnythingLLM, which supports document uploads, custom knowledge bases and conversational interfaces. This makes it a flexible tool for anyone who wants to create a customizable AI to help with research, projects or day-to-day tasks. And with RTX acceleration, users can experience even faster responses.
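The article does not describe how uploaded documents are processed internally, but a common first step when building a knowledge base for an LLM is to split each document into overlapping chunks that can be embedded and retrieved individually. The following is a generic sketch of that idea, not AnythingLLM's implementation; the function name `chunk_text` and the default sizes are assumptions.

```python
def chunk_text(text, chunk_size=200, overlap=40):
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words so that a sentence cut at a
    boundary still appears whole in at least one chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    notes = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_text(notes, chunk_size=4, overlap=1):
        print(chunk)
```

Smaller chunks make retrieval more precise, while larger ones preserve more surrounding context; the right balance depends on the material.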

By loading syllabi, assignments and textbooks into AnythingLLM on RTX PCs, students can gain an adaptive, interactive study companion. They can ask the agent, using plain text or speech, to help with tasks like:

- Generating flashcards from lecture slides: “Create flashcards from the Sound chapter lecture slides. Put key terms on one side and definitions on the other.”

- Asking contextual questions tied to their materials: “Explain conservation of momentum using my Physics 8 notes.”

- Creating and grading quizzes for exam prep: “Create a 10-question multiple choice quiz based on chapters 5-6 of my chemistry textbook and grade my answers.”

- Walking through tough problems step by step: “Show me how to solve problem 4 from my coding homework, step by step.”

Beyond the classroom, hobbyists and professionals can use AnythingLLM to prepare for certifications in new fields of study or for other similar purposes. And running locally on RTX GPUs ensures fast, private responses with no subscription costs or usage limits.

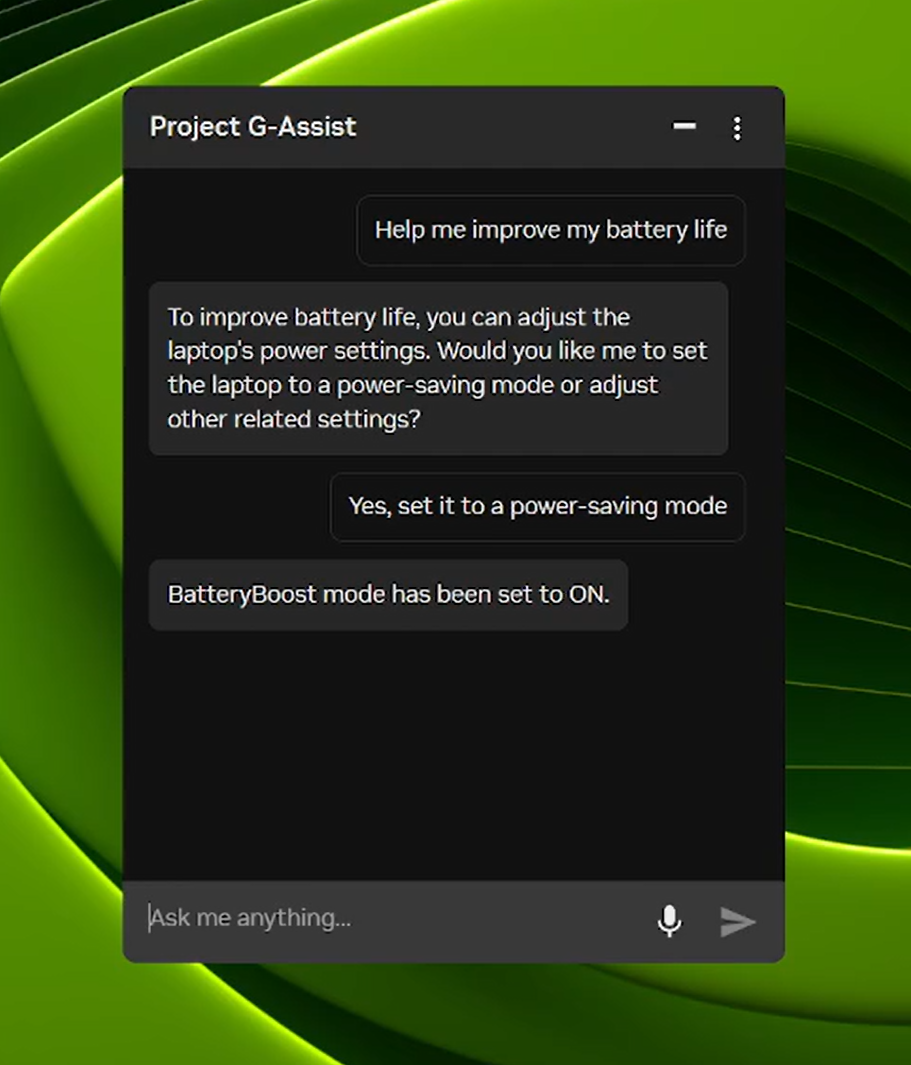

Project G-Assist Can Now Control Laptop Settings

Project G-Assist is an experimental AI assistant that helps users tune, control and optimize their gaming PCs through simple voice or text commands, without needing to dig through menus. Over the next day, a new G-Assist update will roll out via the home page of the NVIDIA App.

Building on its new, more efficient AI model and support for the majority of RTX GPUs released in August, the new G-Assist update adds commands to adjust laptop settings, including:

- App profiles optimized for laptops: Automatically adjust games or apps for efficiency, quality or a balance when laptops aren’t connected to chargers.

- BatteryBoost control: Activate or adjust BatteryBoost to extend battery life while keeping frame rates smooth.

- WhisperMode control: Cut fan noise by up to 50% when needed, and go back to full performance when not.

Project G-Assist is also extensible. With the G-Assist Plug-In Builder, users can create and customize G-Assist functionality by adding new commands or connecting external tools with easy-to-create plug-ins. And with the G-Assist Plug-In Hub, users can easily discover and install plug-ins to expand G-Assist capabilities.

Check out NVIDIA’s G-Assist GitHub repository for materials on how to get started, including sample plug-ins, step-by-step instructions and documentation for building custom functionalities.

#ICYMI: The Latest Advancements in RTX AI PCs

🎉 Ollama Gets a Major Performance Boost on RTX

Latest updates include optimized performance for OpenAI’s gpt-oss-20B, faster Gemma 3 models and smarter model scheduling to reduce memory issues and improve multi-GPU efficiency.

🚀 Llama.cpp and GGML Optimized for RTX

The latest updates deliver faster, more efficient inference on RTX GPUs, including support for the NVIDIA Nemotron Nano v2 9B model, Flash Attention enabled by default and CUDA kernel optimizations.

⚡ Project G-Assist Update Rolls Out

Download the G-Assist v0.1.18 update via the NVIDIA App. The update features new commands for laptop users and enhanced answer quality.

⚙️ Windows ML With NVIDIA TensorRT for RTX Now Generally Available

Microsoft released Windows ML with NVIDIA TensorRT for RTX acceleration, delivering up to 50% faster inference, streamlined deployment and support for LLMs, diffusion and other model types on Windows 11 PCs.

🌐 NVIDIA Nemotron Powers AI Development

The NVIDIA Nemotron collection of open models, datasets and techniques is fueling innovation in AI, from generalized reasoning to industry-specific applications.

Plug in to NVIDIA AI PC on Facebook, Instagram, TikTok and X, and stay informed by subscribing to the RTX AI PC newsletter.

Follow NVIDIA Workstation on LinkedIn and X.

See notice regarding software product information.